Configure your LLM in Datacoves Copilot v2

Create a Datacoves Secret

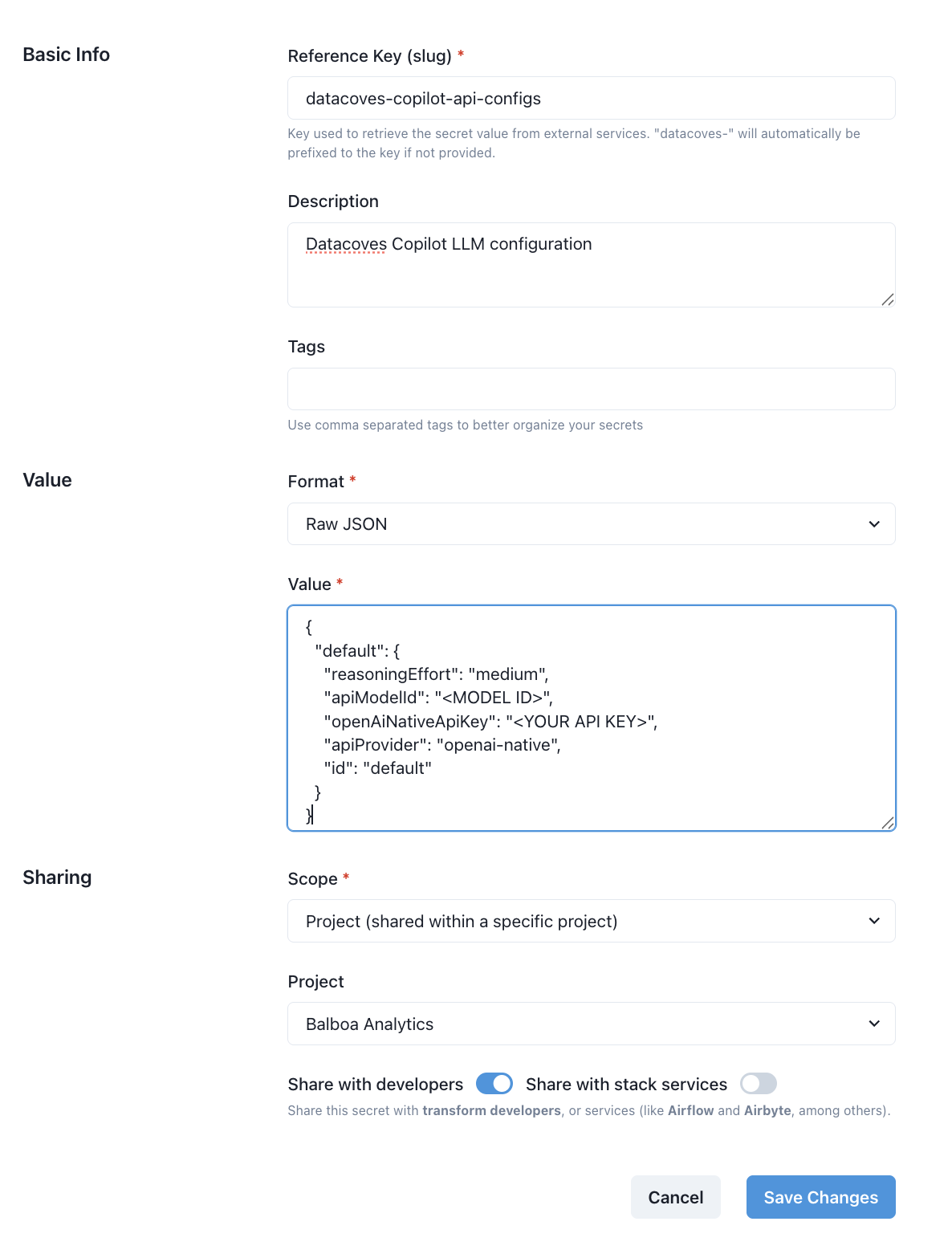

Creating a Datacoves Secret requires some key fields to be filled out:

- Name: The secret must be named `datacoves-copilot-api-configs`
- Description: Provide a simple description such as: Datacoves Copilot config
- Format: Select Raw JSON
- Value: The value will vary depending on the LLM you are utilizing, see the Value formats by LLM Provider section.
- Scope: Select the desired scope, either Project or Environment.
- Project/Environment: Select the Project or Environment that will access this LLM.

Lastly, be sure to toggle on the Share with developers option so that users with developer access will be able to use the LLM.
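Because the Value field takes raw JSON, it can help to check the text before pasting it into the secret. The following is a minimal sketch, not part of Datacoves: `check_secret_value` is a hypothetical helper, and the rules it applies (a top-level "default" object holding "apiProvider" and "id" strings, with no leftover `<...>` placeholders) are assumptions inferred from the provider examples on this page.

```python
import json


def check_secret_value(raw):
    """Return a list of problems found in a candidate secret value (empty if none)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top level must be a JSON object"]

    default = data.get("default")
    if not isinstance(default, dict):
        return ['missing "default" object']

    problems = []
    # Assumption: every provider example on this page carries these two keys.
    for key in ("apiProvider", "id"):
        if not isinstance(default.get(key), str) or not default[key]:
            problems.append(f'"default.{key}" should be a non-empty string')
    # Flag template placeholders such as "<YOUR API KEY>" that were never filled in.
    for key, value in default.items():
        if isinstance(value, str) and value.startswith("<") and value.endswith(">"):
            problems.append(f'"{key}" still holds a placeholder: {value}')
    return problems


if __name__ == "__main__":
    candidate = '{"default": {"apiKey": "<YOUR API KEY>", "apiProvider": "anthropic", "id": "default"}}'
    for problem in check_secret_value(candidate):
        print(problem)
```

An empty list means the text parsed and matched the assumed shape. It does not confirm that the key or model ID is accepted by the provider.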

Value formats by LLM Provider

Amazon Bedrock

{
  "default": {
    "apiModelId": "<MODEL ID>",
    "awsAccessKey": "<YOUR ACCESS KEY>",
    "awsSecretKey": "<YOUR ACCESS SECRET KEY>",
    "awsSessionToken": "<YOUR SESSION TOKEN>",
    "awsRegion": "<REGION ID>",
    "awsCustomArn": "",
    "apiProvider": "bedrock",
    "id": "default"
  }
}

Anthropic

{
  "default": {
    "todoListEnabled": true,
    "consecutiveMistakeLimit": 3,
    "apiKey": "<YOUR API KEY>",
    "apiProvider": "anthropic",
    "id": "default"
  }
}

Cerebras

{
  "default": {
    "apiModelId": "<MODEL ID>",
    "cerebrasApiKey": "<YOUR API KEY>",
    "apiProvider": "cerebras",
    "id": "default"
  }
}

Chutes AI

{
  "default": {
    "apiModelId": "<MODEL ID>",
    "chutesApiKey": "<YOUR API KEY>",
    "apiProvider": "chutes",
    "id": "default"
  }
}

DeepSeek

{
  "default": {
    "apiModelId": "<MODEL ID>",
    "deepSeekApiKey": "<YOUR API KEY>",
    "apiProvider": "deepseek",
    "id": "default"
  }
}

Glama

{
  "default": {
    "glamaModelId": "<MODEL ID>",
    "glamaApiKey": "<YOUR API KEY>",
    "apiProvider": "glama",
    "id": "default"
  }
}

Google Gemini

{
  "default": {
    "apiModelId": "<MODEL ID>",
    "geminiApiKey": "<YOUR API KEY>",
    "apiProvider": "gemini",
    "id": "default"
  }
}

Hugging Face

{
  "default": {
    "huggingFaceApiKey": "<YOUR API KEY>",
    "huggingFaceModelId": "<MODEL ID>",
    "huggingFaceInferenceProvider": "auto",
    "apiProvider": "huggingface",
    "id": "default"
  }
}

Mistral

{
  "default": {
    "apiModelId": "<MODEL ID>",
    "mistralApiKey": "<YOUR API KEY>",
    "apiProvider": "mistral",
    "id": "default"
  }
}

Ollama

{
  "default": {
    "ollamaModelId": "<MODEL ID>",
    "ollamaBaseUrl": "<BASE URL>",
    "ollamaApiKey": "<YOUR API KEY>",
    "apiProvider": "ollama",
    "id": "default"
  }
}

OpenAI

{
  "default": {
    "reasoningEffort": "medium",
    "apiModelId": "<MODEL ID>",
    "openAiNativeApiKey": "<YOUR API KEY>",
    "apiProvider": "openai-native",
    "id": "default"
  }
}

OpenAI Compatible

{
  "default": {
    "reasoningEffort": "medium",
    "openAiBaseUrl": "<BASE URL>",
    "openAiApiKey": "<YOUR API KEY>",
    "openAiModelId": "<MODEL ID>",
    "openAiUseAzure": false,
    "azureApiVersion": "",
    "openAiHeaders": {},
    "apiProvider": "openai",
    "id": "default"
  }
}

Set "openAiUseAzure": true when using Azure, and optionally a specific version "azureApiVersion": "<VERSION>".

Fine-tune model usage with this additional key:

"openAiCustomModelInfo": {
  "maxTokens": -1,
  "contextWindow": 128000,
  "supportsImages": true,
  "supportsPromptCache": false,
  "inputPrice": 0,
  "outputPrice": 0,
  "reasoningEffort": "medium"
}
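The two notes above (the Azure flag and the custom model info key) can be combined into a single value. This is a rough sketch that builds that value in Python and prints it as JSON for pasting into the secret. The helper names `with_azure` and `with_custom_model_info` are hypothetical, and placing "openAiCustomModelInfo" inside the "default" object next to the other keys is an assumption, since the page does not state where the key goes.

```python
import copy
import json

# OpenAI Compatible template, copied from the example above.
base = {
    "default": {
        "reasoningEffort": "medium",
        "openAiBaseUrl": "<BASE URL>",
        "openAiApiKey": "<YOUR API KEY>",
        "openAiModelId": "<MODEL ID>",
        "openAiUseAzure": False,
        "azureApiVersion": "",
        "openAiHeaders": {},
        "apiProvider": "openai",
        "id": "default",
    }
}

# Values copied from the openAiCustomModelInfo fragment above.
model_info = {
    "maxTokens": -1,
    "contextWindow": 128000,
    "supportsImages": True,
    "supportsPromptCache": False,
    "inputPrice": 0,
    "outputPrice": 0,
    "reasoningEffort": "medium",
}


def with_azure(config, api_version=""):
    """Return a copy with the Azure flag on and, optionally, a pinned API version."""
    updated = copy.deepcopy(config)
    updated["default"]["openAiUseAzure"] = True
    if api_version:
        updated["default"]["azureApiVersion"] = api_version
    return updated


def with_custom_model_info(config, info):
    """Return a copy with the custom model info attached.

    Assumption: the key belongs inside "default" alongside the other openAi* keys.
    """
    updated = copy.deepcopy(config)
    updated["default"]["openAiCustomModelInfo"] = dict(info)
    return updated


secret_value = with_custom_model_info(with_azure(base, "<VERSION>"), model_info)
print(json.dumps(secret_value, indent=2))
```

Both helpers copy the template rather than editing it in place, so the same base can be reused for a non-Azure secret.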

Open Router

{
  "default": {
    "reasoningEffort": "medium",
    "openRouterApiKey": "<YOUR API KEY>",
    "openRouterModelId": "<MODEL ID>",
    "apiProvider": "openrouter",
    "id": "default"
  }
}

Requesty

{
  "default": {
    "reasoningEffort": "medium",
    "requestyApiKey": "<YOUR API KEY>",
    "requestyModelId": "<MODEL ID>",
    "apiProvider": "requesty",
    "id": "default"
  }
}

xAI Grok

{
  "default": {
    "reasoningEffort": "medium",
    "apiModelId": "<MODEL ID>",
    "xaiApiKey": "<YOUR API KEY>",
    "apiProvider": "xai",
    "id": "default"
  }
}
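Several of the providers above differ only in the names of the model ID and API key fields. The sketch below gathers those names into one table and generates the JSON from it. `PROVIDER_FIELDS` and `build_secret_value` are hypothetical names for illustration, not a Datacoves API. Providers whose examples need other fields (Amazon Bedrock, Anthropic, Hugging Face, Ollama, OpenAI Compatible) are left out. The page does not say whether keys such as "reasoningEffort" are required, so they are passed through an optional `extra` argument.

```python
import json

# (model ID field, API key field) for each "apiProvider", as shown in the examples above.
PROVIDER_FIELDS = {
    "cerebras": ("apiModelId", "cerebrasApiKey"),
    "chutes": ("apiModelId", "chutesApiKey"),
    "deepseek": ("apiModelId", "deepSeekApiKey"),
    "glama": ("glamaModelId", "glamaApiKey"),
    "gemini": ("apiModelId", "geminiApiKey"),
    "mistral": ("apiModelId", "mistralApiKey"),
    "openai-native": ("apiModelId", "openAiNativeApiKey"),
    "openrouter": ("openRouterModelId", "openRouterApiKey"),
    "requesty": ("requestyModelId", "requestyApiKey"),
    "xai": ("apiModelId", "xaiApiKey"),
}


def build_secret_value(provider, model_id, api_key, extra=None):
    """Return the secret value as a JSON string for one of the providers in the table."""
    if provider not in PROVIDER_FIELDS:
        raise ValueError(f"no field mapping for provider: {provider}")
    model_field, key_field = PROVIDER_FIELDS[provider]
    entry = dict(extra or {})  # optional keys such as "reasoningEffort"
    entry.update({
        model_field: model_id,
        key_field: api_key,
        "apiProvider": provider,
        "id": "default",
    })
    return json.dumps({"default": entry}, indent=2)


if __name__ == "__main__":
    print(build_secret_value("xai", "<MODEL ID>", "<YOUR API KEY>",
                             extra={"reasoningEffort": "medium"}))
```

The printed text can be pasted into the Value field once the placeholders are replaced with real values.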

Example Secret